Collective Intelligence: Foundations + Radical Ideas - Day 2 at SFI

Santa Fe's weather is winning hearts while the conference wins over minds!

The entire series can be accessed here.

Creative problem-solving requires both divergent and convergent thinking. The Collective Intelligence Symposium is the ultimate challenge for thinkers: first to expand our understanding of biology, economics, physics, and organizational leadership, then to collide and combine those principles into something useful for our daily work.

“Our job in physics is to see things simply... in a unified way, in terms of a few simple principles.”

I've found that two types of conversation partners are drawn to each other here: those whose work is wildly divergent and those who are solving the same problems.

My work with Abhishek focuses on improving human-AI teaming, including how leaders can make better decisions about how, when, and why to integrate AI tools. After this week, we'll be armed with a whole new toolkit!

Top 3 Insights

1. Environmental Expressions

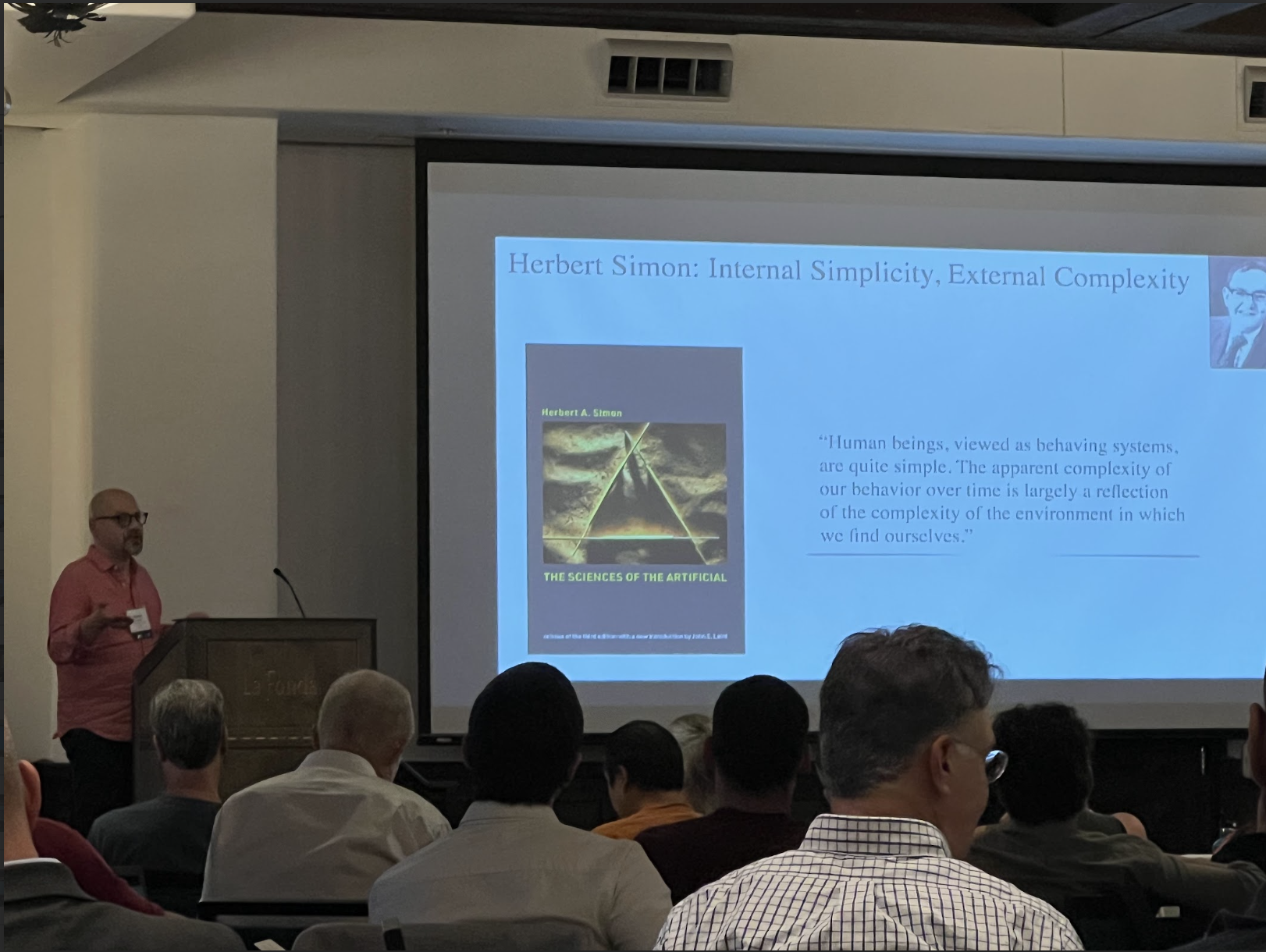

We often think of individuals as more complex than the environments they express, but the truth is the opposite: we are compressed, simplified expressions of our environments. In *The Sciences of the Artificial*, Nobel laureate economist Herb Simon wrote that adaptive systems (like people!) function by compressing vast amounts of information about their environments into useful representations that guide their behavior. Our brains and large language models (LLMs), for example, can efficiently filter out large amounts of irrelevant information and distill what remains into rules for action.

Internal Simplicity, External Complexity - Herbert Simon

2. Compressed Artifacts

Language itself is a compressed artifact, a tool for distilling and expressing lots and lots of tacit knowledge. But that's not all language can do: it also serves as scaffolding that shapes how we understand and think about the world.

3. Consensus on Intelligence

We are a long way from consensus about how to define or measure intelligence. Complexity scientists like Melanie Mitchell argue that current benchmarks for assessing how well AIs understand the world fail to capture the critical processes underlying true intelligence.

Humans of SFI CI

Kyle Killian is the Emerging Data and Modeling Lead for the National Geospatial-Intelligence Agency. His work focuses on identifying and applying better modeling techniques to help the intelligence community understand, integrate, and prepare for novel AI capabilities.

Casey Cox directs SFI's Applied Complexity network (of which Abhishek and I are members), which integrates private sector leaders and insights into the intellectual life of the Institute. Casey has a remarkable ability to assemble and facilitate high-impact group collaborations (can I say 'intelligent collectives'?). She works to close gaps between scientists and practitioners so that scientists gain feedback from the front lines and practitioners gain the insights they need to pull ahead.

Continued my conversation with Ted Chiang, with whom I had a fascinating discussion about intelligence the day before!

Mike Walton helps the US Census Bureau discover and pursue highly innovative uses for its rich datasets. In an age where data is king, the Census Bureau has remarkable potential to support better models of key societal challenges!

Two Favorite Lectures

In "The Nature of Collective Intelligence in Economic Settings," physicist Cesar Hidalgo shared compelling (and visually beautiful) models of how collective intelligence flows through economies. What surprised me most was his conclusion that despite the popularity of remote work, *knowledge is non-fungible*, and locations that produce major knowledge contributions (such as patents) exert significant local effects that encourage the people who live there to participate.

In "The Meaning of Intelligence in AI," Melanie Mitchell played myth-buster to various overhyped claims about AI performance. Did GPT-4 really hire a human worker to complete a CAPTCHA? When LLMs pass class exams, do they actually understand the material? I particularly enjoyed Melanie's presentation style, which blends intellectual rigor, up-to-the-minute examples, and humor.

Looking forward to …

How we understand intelligence is a big deal. Until we reach a consensus on what intelligence is and how to measure it, we are left wandering in the dark on many of life's most important questions. How can organizations find, retain, and support intelligent collectives that are greater than the sum of their parts? How can AI progress and its attendant risks be meaningfully measured? I look forward to further debates among SFI thinkers today and hope to return with more insights for you tomorrow!